Zhang, Y., Chen, G., Vukomanovic, J., Singh, K.K., Liu, Y., Holden, S., & Meentemeyer, R.K. (2020). Recurrent Shadow Attention Model (RSAM) for shadow removal in high-resolution urban land-cover mapping. Remote Sensing of Environment, 247, 111945.

Abstract

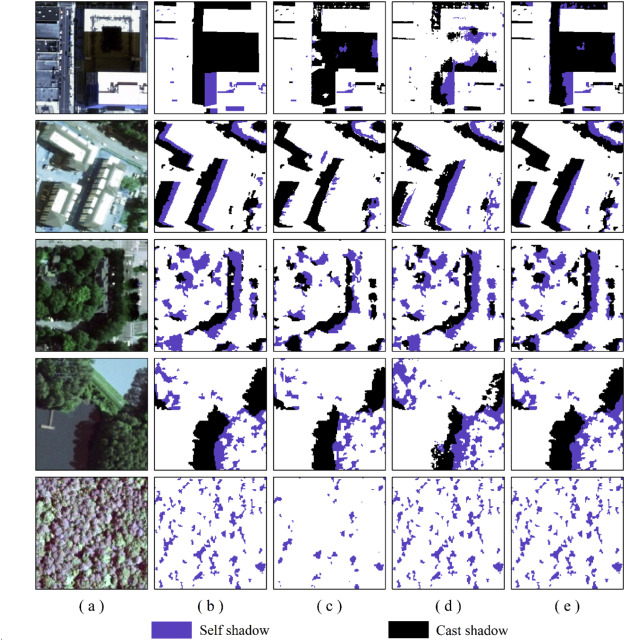

Shadows are prevalent in urban environments and introduce high uncertainty into fine-scale urban land-cover mapping. In this study, we developed a Recurrent Shadow Attention Model (RSAM), capitalizing on state-of-the-art deep learning architectures, to retrieve fine-scale land-cover classes within cast and self shadows along the urban-rural gradient. RSAM differs from existing shadow removal models by progressively refining the shadow detection result with two attention-based interacting modules – the Shadow Detection Module (SDM) and the Shadow Classification Module (SCM). To facilitate model training and validation, we also created a Shadow Semantic Annotation Database (SSAD) using 1 m resolution National Agriculture Imagery Program (NAIP) aerial imagery. The SSAD comprises 103 image patches (500 × 500 pixels each) containing various types of shadows and six major land-cover classes – building, tree, grass/shrub, road, water, and farmland. Our results show an overall accuracy of 90.6% and a Kappa of 0.82 for RSAM in extracting the six land-cover classes within shadows. Model performance was stable along the urban-rural gradient, although it was slightly better in rural areas than in urban centers or suburban neighborhoods. These findings suggest that RSAM is a robust solution for eliminating, in high-resolution mapping, the effects of both cast and self shadows, which have not received equal attention in previous studies.
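For readers unfamiliar with the two reported metrics, the sketch below shows how overall accuracy and Cohen's Kappa are conventionally derived from a confusion matrix. It is an illustration only: the function name `accuracy_and_kappa` and the three-class matrix are hypothetical, the numbers are not taken from the study, and the paper's own evaluation code may differ.

```python
def accuracy_and_kappa(cm):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    cm[i][j] is the number of samples with reference class i that were
    predicted as class j. Assumes at least two classes are present, so the
    chance agreement is strictly below 1.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)

    # Observed agreement: share of samples on the diagonal.
    observed = sum(cm[i][i] for i in range(n)) / total

    # Chance agreement: sum over classes of (row total * column total) / total^2.
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2

    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical 3-class confusion matrix (illustrative values only).
example_cm = [
    [50, 3, 2],
    [4, 30, 1],
    [1, 2, 7],
]
oa, kappa = accuracy_and_kappa(example_cm)
print(f"overall accuracy = {oa:.3f}, kappa = {kappa:.3f}")
```

Kappa discounts the agreement expected by chance, which is why it can sit noticeably below overall accuracy when class frequencies are imbalanced.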